On mentorship

A couple of months ago I had a conversation with a fellow employee – let’s call him Balgruuf – who decided to quit the grind and become a career coach. Shortly after, I witnessed him interact with our mutual acquaintance – Farengar – who was in a difficult spot in their career. Balgruuf enthusiastically laid out the exact steps Farengar must take, and in what order. It generally felt like Balgruuf had this whole career thing figured out, and Farengar just stumbled along. Needless to say, Farengar quickly changed the topic.

I haven’t stopped thinking about mentorship since that day. In the 10 or so years of my career so far, I’ve had a number of mentors - both formal and informal. I also get the opportunity to mentor people around me – both sides of the relationship really fascinate me.

I’ve had some mentors who worked really well for me, and some who didn’t. I’ve also had varying levels of success mentoring people myself. And one of the defining factors in a successful experience was this: people conflate mentorship with giving advice – two different, but oh-so-similar-feeling things. Let me elaborate on the difference with a tangentially related example.

Sometimes my wife comes home and shares frustrations that inevitably arise after a long day at work. There’s little to no room for my input, because she needs somebody to just listen, or maybe a rubber duck to talk at. And sometimes my better half wants to hear my thoughts on the subject. Needing to vent and asking for advice are two completely distinct scenarios in this case.

Just like in my home life, sometimes people come looking for advice. But more often than not, they’re looking for mentorship.

Giving advice is prescriptive, while mentorship is more nuanced, and takes more finesse from both parties.

In Relationomics, Randy Ross discusses the role that relationships play in personal growth. He talks about the harmful “self help” culture, which discounts the value of the human element in self-development.

This is the gap where mentorship fits in. There’s one huge “but” though, and that’s the fact that the person needs to be ready for the specific feedback they might be getting.

A mentor is there to guide the internal conversation, encourage insight, and suggest a direction. A mentor is there to identify when the person being mentored is heading in a completely wrong direction, or not addressing the elephant in the room.

In fact, I’ve been noticing a trend at Google to avoid the word “mentorship” altogether, to steer clear of the advice-slinging attitude of your everyday Balgruuf. And it’s been a helpful trend, since framing the mentorship relationship around guidance, and reminding mentors to let those being mentored drive the growth, is crucial for healthy peer-to-peer learning.

Even if the so-called mentor has some aspect of life completely figured out for themselves, the person being mentored must drive the whole journey. Otherwise there’s really no room for growth in that relationship.

Writing for fun

I’ve had this blog since 2012, and I’m only now getting close to my 100th post. All because I’m a perfectionist, which sure as hell didn’t make me a better writer.

I love writing, and I feel like I’m getting better after every piece I write, be it publishing blog posts, journaling, countless design docs, navigating email politics, or writing a book. I write a lot, about many different topics: if I’m interested in a topic for at least a few hours, you can bet I’ll write about it.

Then how come this blog barely gets one post per month?

I meticulously research and edit, generously discard drafts, and it often takes me days strung across weeks to put something together. Something that I feel is worthy of being displayed next to my name. Perfectionism at its core, attached to hobbyist web content.

I struggled with this when writing Mastering Vim too: the first edition was rushed out by the publisher long before it was ready, with various errors and inconsistencies in it, and a few downright unfinished bits. It took over a year of avoiding even thinking about the book, and two more editions, for me to become comfortable with merely sharing that I published a book.

And all of this sucks the joy out of writing. I’m not a for-profit writer. It’s not part of my career path, and I obviously didn’t go to school for it (not that I went to school for anything else). And it sure as hell shouldn’t be grueling to have to come up with what to write.

This blog has a rather modest following - it brings in around 3,000 readers a month, with a few regulars sprinkled here and there (honestly - I would love to meet at least one of you weirdos someday). I don’t target a particular market niche, I don’t have a content or SEO strategy, and I opportunistically monetize to cover the website running costs.

What’s crazy is that nearly half of that traffic is organic search for a quick note I jotted down back in 2013! I could spend weeks putting together a literary love child and not get even a fraction of the attention this back-of-the-napkin screenshot received.

Back in 2012 I used this blog as a way to categorize my discoveries about developing software, and later what I’ve learned about working with people. Over the years I gradually expanded to travel (including that time I lived in my car for a year), and general things I like, such as keyboards or tabletop role playing games.

I’m putting this together to remind myself that I love writing and why I started this blog. That not every piece of content requires hours of research, or even has a clear audience in mind for that matter.

I wouldn’t have blinked an eye flooding this blog with unfiltered thoughts, perspectives, and experiences if it weren’t attached to my real world persona. It’s easy to throw out something I perceive as “unworthy” anonymously. It’s mortifying to publish such a piece at my-first-name-last-name-dot-com.

So here it is, the first post I wrote purely for fun! I’m still taking a light editing pass on it, and drastically cutting it down in size - I’m not a savage! But unlike in my usual writing, there’s no clear value I’m providing or a skill I’m trying to teach - and that’s a huge step for me. Feels good!

Vortex Core 40% keyboard

This review is written entirely using Vortex Core, in Markdown, and using Vim.

Earlier this week I purchased Vortex Core, a 40% keyboard from the Taiwanese company Vortex, makers of the ever popular Pok3r keyboard (which I happen to use as my daily driver). This is a keyboard with only 47 keys: it drops the numpad (what’s called 80%), the function row (now we’re down to 60%), and the dedicated number row (bringing us to the 40% keyboard realm).

Words don’t do justice to how small a 40% keyboard is. So here is a picture of Vortex Core next to Pok3r, which is already a small keyboard.

At around $100 on Amazon it’s one of the cheaper 40% options, but Vortex did not skimp on quality. The case is sturdy, is made of beautiful anodized aluminum, and has some weight to it. The keycaps this keyboard comes with feel fantastic (including slight dips on the F and J keys), and I’m a huge fan of the look.

I hooked it up to my Microsoft Surface Go as a toy more than anything else. And now I think I may have discovered the perfect writing machine! The small form factor of the keyboard really complements the already small Surface Go screen, and there’s just enough screen real estate to comfortably write and edit text.

I’ve used Vortex Core on and off for the past few days, and I feel like I have a solid feel for it. Let’s dig in!

What’s different about it?

First, the keycap size and distance between keys are standard: it’s a standard staggered layout most people are used to. This means that when typing words, there is no noticeable speed drop. In fact I find myself typing a tiny bit faster using this keyboard than my daily driver - but that could just be my enthusiasm shining through. I hover at around 80 words per minute on both keyboards.

That is until it’s time to type “you’re”, or use any punctuation outside of the :;,.<> symbols. That’s right, the normally easily accessible apostrophe is hidden under the function layer (Fn1 + b), and so is the question mark (Fn1 + Shift + Tab). -, =, /, \, [, and ] are gone too, and I’ll cover those in due time.

On the first day this immediately dropped my typing speed to around 50 words per minute, as it’s completely unintuitive at first! In fact, I only just stopped hitting Enter every time I tried to place an apostrophe! But after only a few hours of sporadic use I’m up to 65 WPM, and I expect to regain my regular typing speed within a week.

Despite what you might think, it’s relatively easy to get used to odd key placement like this.

Keys have 4 layers (not to be confused with programming layers), and that’s how the numbers, symbols, and some of the more rarely used keys are accessed. For example, here’s what the L key contains:

- Default layer (no modifiers): L

- Fn1 layer: 0

- Fn1 + Shift layer: )

- Fn layer: right arrow key
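One way to picture these layers is as a small lookup table per key, where the set of modifiers you hold down selects the layer. The minimal Python sketch below models only the four legends listed above for the L key; the names `L_KEY` and `resolve` are hypothetical and have nothing to do with how the keyboard’s firmware actually stores its layout.

```python
# Hypothetical model of the legends printed on the Vortex Core "L" key.
# Each layer is keyed by the (unordered) set of modifiers held down.
L_KEY = {
    frozenset(): "L",                 # default layer, no modifiers
    frozenset({"Fn1"}): "0",          # Fn1 layer: number row
    frozenset({"Fn1", "Shift"}): ")", # Fn1 + Shift layer: shifted number row
    frozenset({"Fn"}): "Right",       # Fn layer: right arrow key
}


def resolve(layers, *modifiers):
    """Return what a key produces for the held modifiers, or None if unmapped."""
    return layers.get(frozenset(modifiers))


print(resolve(L_KEY))                  # L
print(resolve(L_KEY, "Fn1"))           # 0
print(resolve(L_KEY, "Shift", "Fn1"))  # )  (modifier order doesn't matter)
print(resolve(L_KEY, "Fn"))            # Right
```

Using a `frozenset` as the key means holding Shift then Fn1 lands on the same layer as Fn1 then Shift, which matches how chords work on the board.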

The good news is that unlike many 40% keyboards on the market (and it’s a rather esoteric market), Vortex Core has key inscriptions for each layer. Something like Planck would require you to print out layout cheatsheets while you get used to the function layers.

As I continue attempting to type, numbers always take me by surprise: the whole number row is a function layer on top of the home row (where your fingers normally rest). After initially hitting the empty air when attempting to type numbers, I began to get used to using the home row instead.

The placement mimics the order the keys would be in on the number row (1234567890-=), but 1 is placed on the Tab key, while = is on the Enter. While I was able to find the numbers relatively easily thanks to the familiar order, I would often be off by one because the row starts on the Tab key.

Things get a lot more complicated when it comes to special symbols. These are already normally gated behind a Shift-press on a regular keyboard, and Vortex Core requires some Emacs-level gymnastics! E.g. you need to press Fn1 + Shift + F to conjure %.

Such complex keypresses are beyond counter-intuitive at first. Yet after a few hours, I began to get used to some of the more frequently used keys: ! is Fn1 + Shift + Tab, - is Fn1 + Shift + 1, $ (end of line in Vim) is Fn1 + Shift + D, and so on.

It’s fairly easy to get used to inserting a lone symbol here and there, but the problems start when having to combine multiple symbols at once. E.g. writing a Markdown expression like `Fn1 + Shift + D` = `$` from the paragraph above involves the following keypresses: <Fn1><Esc> F N <Fn1><Tab> <Fn1><Shift><Enter> S H I F T <Fn1><Shift><Enter> D <Fn1><Esc> <Fn1><Enter> <Fn1><Esc> <Fn1><Shift>D <Fn1><Esc>. Can you imagine how long it took me to write that up?
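The way these chords pile up follows directly from the number row living on the home row. Here’s a minimal Python sketch that turns text into one chord per character. It assumes the home row reads Tab A S D F G H J K L ; Enter (the review only confirms that 1 sits on Tab, 0 on L, and = on Enter) and that Shift produces the usual US-layout symbols; `HOME_ROW`, `FN1`, and `chords` are hypothetical names, and only letters, spaces, and the Fn1 number/symbol layer are covered.

```python
# Hypothetical model of the Vortex Core Fn1 number layer.
# ASSUMPTION: home row order is Tab A S D F G H J K L ; Enter.
HOME_ROW = ["Tab", "A", "S", "D", "F", "G", "H", "J", "K", "L", ";", "Enter"]
NUMBER_ROW = "1234567890-="
SHIFTED = "!@#$%^&*()_+"  # ASSUMPTION: US layout Shift + number row

# Map each produced character to the chord that types it.
FN1 = {}
for key, plain, shifted in zip(HOME_ROW, NUMBER_ROW, SHIFTED):
    FN1[plain] = ["Fn1", key]
    FN1[shifted] = ["Fn1", "Shift", key]


def chords(text):
    """Return one chord (list of keys) per character in `text`."""
    result = []
    for ch in text:
        if ch in FN1:
            result.append(FN1[ch])
        elif ch.isalpha():
            result.append([ch.upper()])  # letter keys; Shift for capitals ignored
        elif ch == " ":
            result.append(["Space"])
        else:
            raise KeyError(f"no mapping for {ch!r} in this sketch")
    return result


print(chords("$"))    # [['Fn1', 'Shift', 'D']]
print(chords("z=1"))  # [['Z'], ['Fn1', 'Enter'], ['Fn1', 'Tab']]
```

The second call reproduces the Vim spelling-correction sequence (z= followed by a number) that comes up later in this review, and it shows why mixing symbols hurts: every non-letter costs a two- or three-key chord.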

The most difficult part of getting used to the keyboard is the fact that a few keys on the right side are chopped off: '/[]\ are placed in the bottom right of the keyboard, on the B, N, M, comma, and period keys. While the rest of the layout mimics the existing convention and merely shifts rows down, the aforementioned keys are placed arbitrarily (as there’s no logical way to place them otherwise).

This probably won’t worry you if you don’t write a lot of code or math, but I do, and it’s muscle memory I’ll have to develop.

There are dedicated Del and Backspace keys, which is a bit of an odd choice, likely influenced by needing somewhere to place the F12 key: the function row sits on the row right above the home row, hidden behind the Fn1 layer.

The spacebar is split in two (for ease of finding keycaps, I hear), and it doesn’t affect me whatsoever. I mostly hit the spacebar with my left thumb, and it’s convenient.

Tab is placed where the Caps Lock is, which feels like a good choice. After accidentally hitting Esc a few times, I got used to the position. Do make sure to get the latest firmware for your Vortex Core: I believe earlier firmware versions hide Tab behind a function layer, defaulting the key to Caps Lock (although my keycaps reflected the updated firmware).

So I’d say the numbers and the function row take the least amount of time to get used to. It’s the special characters that take time.

Can you use it with Vim?

I’m a huge fan of Vim, and I even wrote a book on the subject. In fact, I’m writing this very review in Vim.

And I must say, it’s difficult. My productivity took a hit. I use curly braces to move between paragraphs, I regularly search with /, ?, and *, move within a line with _ and $, and use numbers in my commands like c2w (change two words) as well as other special characters, e.g. da" (delete around double quotes).

The most difficult combination is spelling correction: z= followed by a number to select the correct spelling. I consistently break my flow by having to press Z <Fn1><Enter> <Fn1><Tab> or something similar to quickly fix a misspelling.

Yet after a few days, my Vim productivity is slowly climbing back up, and I find myself remembering the right key combinations as the muscle memory kicks in.

I assume my Vim experience would translate well to programming, although even though I write code for a living, I haven’t yet used Vortex Core to crank out code.

Speaking of programming

The whole keyboard is fully programmable (as long as you update it to the latest firmware).

It’s an easy process: a three-page manual covers everything that’s needed, like using different keyboard layers or remapping regular and function keys.

The manual also describes turning the right Win, Pn, Ctrl, and Shift keys into arrow keys by pressing left Win, left Alt, and the right spacebar. Vortex keyboards nowadays always come with this feature, but thanks to the small size of these keys (especially Shift), the impromptu arrow keys on Vortex Core are nearly indistinguishable from dedicated arrow keys.

Remapping is helpful, since I’m used to having Ctrl where Caps Lock is (even though this means I have to hide Tab behind a function layer), or using hjkl as arrow keys (as opposed to the default ijkl).

It took me only a few minutes to adjust the keyboard to my needs, but I imagine I will come back for tweaks: I’m not sure I’ll be able to get used to special symbols hidden behind the Fn1 + Shift + key layer. Regularly pressing three keys at a time (with two of these keys being on the edge of the keyboard) feels unnatural and inconvenient right now. But I’m only a few hours in, and stenographers manage to do it.

Living in the command line

The absence of certain special characters is especially felt when using the command line. Not having a forward slash available with a single keypress makes typing paths more difficult. I also use Ctrl + \ as my tmux prefix key, and as you can imagine it’s just as problematic.

Despite so many difficulties, I’m loving my time with Vortex Core! To be honest with myself, I don’t buy new keyboards to be productive, or increase my typing speed. I buy them because they look great and are fun to type on. And Vortex Core looks fantastic, and being able to cover most of the keyboard with both hands is amazing.

There’s just something special about having such a small board under my fingertips.

One page TTRPG prep

I’ve been running tabletop role playing games on and off for over a decade, and I’ve settled into a prep routine. It’s heavily borrowed, if not outright stolen, from other GMs on the Internets, and I encourage you to steal and adapt what I’m doing as well. If I had to guess, this way of preparation is stolen directly from the Lazy GM’s table.

I’ve learned that in order to run a successful session, I need to prepare the following:

- Discoveries: What my players need to discover.

- Scenes: Where things happen.

- Clues: Some thoughts on how to expose the discoveries.

- Key NPCs: Some names and primary aspects of non-player characters.

- Enemies: A few stat blocks or references to enemies.

And all of the above fits on a single page: I find it enough to run the session while providing player freedom without having to completely invent everything on the fly.

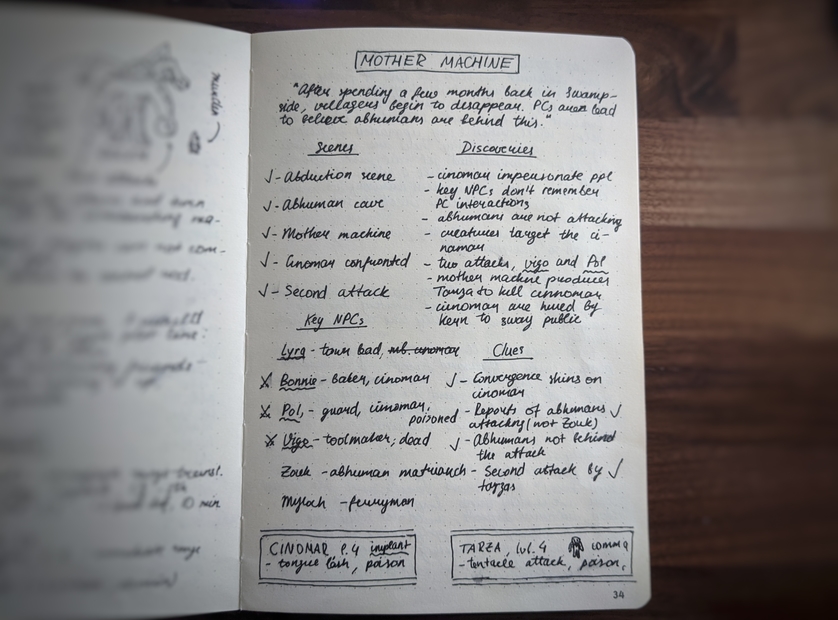

Here’s an example for a Numenera game I ran a few weeks ago, where I adapted the idea behind the “Mother Machine” module from “Explorer’s Keys: Ten Instant Adventures for Numenera”:

In this example, the village under the players’ protection is attacked by “tarza”, never-before-seen monsters. For historical reasons, my players are initially expected to blame a neighboring tribe of abhumans for the attack. The monsters are in fact part of a defense mechanism that is attempting to exterminate so-called “cinomar”: doppelgangers who are impersonating some of the villagers.

Discoveries

This section covers key information and plot twists, and it’s the one I start with. I use it as a tool to outline the adventure, and it’s helpful to refer to if I get stuck.

These are the details players should unravel throughout the session, and I usually check them off as the players find them. In fact, you’ll see I check off most bullet points on the page as the session plays out.

Although, now that I’m looking at the example I provided, the discoveries are not marked. I guess I’m not very consistent.

Scenes

This is the primary tool I use during the game. Scenes outline encounters, be they social, exploratory, or combat related. Scenes help me form a general idea about the locations the adventure will take place in, as well as some of the key action sequences.

I often use scenes to help me control pacing: if I’m running a 3 hour game with roughly 5 scenes planned, I know to hurry up if I haven’t gotten through any of them by the one hour mark. It’s often a good time to narrate a time skip, tie up an ongoing investigation, or suggest a direction for the party to move in.
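The pacing check above boils down to simple proportional arithmetic. Here’s a minimal Python sketch, assuming scenes are spread evenly across the session (real sessions rarely are); `behind_schedule` is a hypothetical helper, not part of any tool.

```python
def behind_schedule(elapsed_min, session_min, scenes_planned, scenes_done):
    """Return True if fewer scenes are finished than an even pace would have completed.

    ASSUMPTION: scenes are evenly spaced across the session.
    """
    expected = scenes_planned * elapsed_min / session_min
    # Compare against whole scenes an even pace would have fully finished by now.
    return scenes_done < int(expected)


# 3 hour game, 5 scenes, one hour in: an even pace finishes about 1.7 scenes.
print(behind_schedule(60, 180, 5, 0))  # True: time to nudge things along
print(behind_schedule(60, 180, 5, 1))  # False
```

Rounding the expected count down keeps the check forgiving: it only raises a flag once a whole scene’s worth of time has passed without progress.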

Now this doesn’t mean that the adventure is limited to these scenes. If (or more accurately “when”) the adventure goes off the rails, I reskin these scenes or use them as inspiration to quickly throw together a new scene.

Clues

Clues are ideas for how to surface discoveries. These are not strictly necessary if you’re really creative, but if you’re anything like me - these are a godsend.

Clues are concrete ways for players to discover plot points outlined in “Discoveries”. “The assassin had a letter signed by the big bad”, or “The drunken sailor lets slip about a cult in the town”. I aim for one clue per discovery, but it’s not a hard rule.

The list should not be exhaustive, and should not be strictly followed: I treat this section as an exercise in creativity. I find it more impactful to come up with clues as I play. Players often search in places I haven’t thought of, so I place the clue to a discovery wherever the players are looking.

Key NPCs

This is a list of key non-player characters relevant to the game. I try to keep the number of named NPCs low to help my players remember them better, and I try to use as many recurring NPCs as humanly possible.

I usually add an aspect for each of the NPCs - a short description in a few words, something that makes them stand out in some way.

This list doesn’t have to be exhaustive – you’ll probably want to use the running list of NPCs for your campaign as a supplement for unexpected recurring characters, and a list of pre-generated names for unexpected encounters.

Enemies

The last section holds enemy stat blocks for quick reference if (again, “when”) combat breaks out.

For Numenera, these are not particularly complex: most enemies are described by a single number and a few key things about them. I’d imagine for D&D and other combat-focused systems you’d want to put a bit more effort into making sure you’re building balanced combat encounters, so that would warrant a section of its own.

I tend to spend a little under 20 minutes on all of the above, and I get to use it in three out of four games; the fourth one tends to go off the rails completely, and I’m okay with it. Without investing even this much time in prep, it’s easy to be taken for a ride by the players.

Numenera for D&D DMs

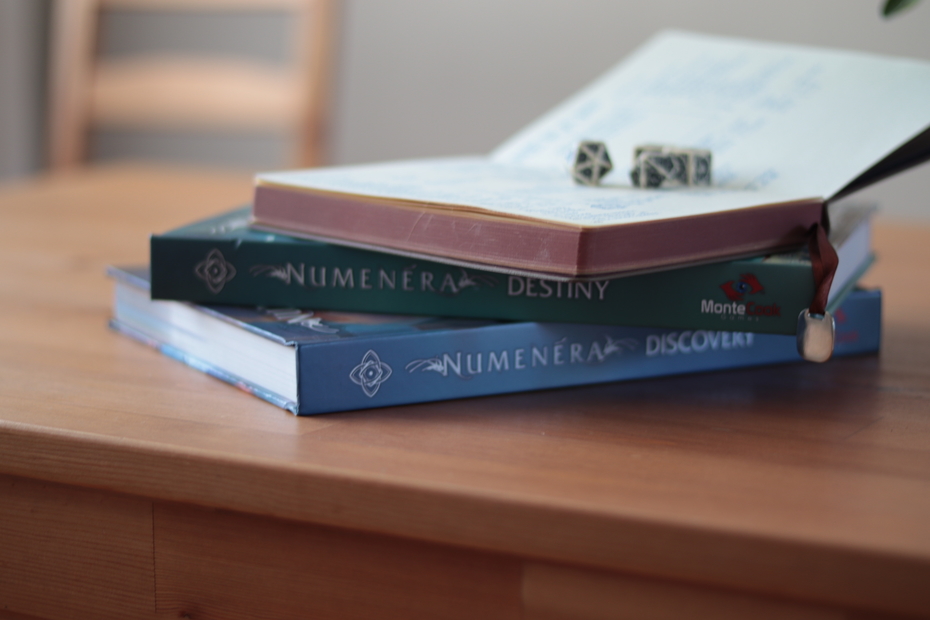

We’ll be having our 12th Numenera game later this week, and ultimately Numenera proved to be easier to run than Dungeons and Dragons. I just wrote about teaching Numenera to my D&D players, and it’s worth sharing my own experience getting familiar with the system as a GM.

Story is king

As a soft tabletop role playing system, Numenera focuses on storytelling over the rules. Every dozen pages or so the rulebook tirelessly reminds the reader: “if rules get in the way of having fun or creating an interesting story, change them”.

Depending on your DMing style and your party’s needs, you can get away with a lackluster D&D story: even if the party is saving the world from the evil wizard for the umpteenth time, there are decently fun board game mechanics to fall back on. The Monster Manual and Dungeon Master’s Guide provide hundreds of fun creatures and straightforward rules for building balanced encounters, and there are plenty of clearly laid out tactical choices and fun abilities to play with in combat. Take out the storytelling, and you’ll have a pretty fun tactical game (isn’t that what Gloomhaven did?).

But there really aren’t enough rules in Numenera to make combat engaging without additional emotional investment from the players. This makes building something people care about paramount to having fun with Numenera. The usual storytelling rules apply: build a compelling story and let the players take you for a ride if their story is better (and it often is).

You don’t need combat encounters

Numenera supports a breadth of combat options limited only by the players’ imagination, but many of them are not immediately obvious, and newer players will struggle to utilize the full spectrum of abilities available to them. Combat uses the same rules as the rest of the game, and is not more mechanically complicated (you decide what you want to do, you try to beat a certain difficulty, you fail spectacularly).
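That parenthetical loop is small enough to write down. In Numenera’s core rule, the GM sets a task difficulty from 0 to 10, things like skills, assets, and Effort ease it by steps, and the player has to roll a d20 equal to or above three times the final difficulty. The Python sketch below assumes exactly that and nothing more: it ignores special rolls (a natural 1, and 17 through 20) and GM intrusions, and `task_succeeds` is a hypothetical helper name.

```python
import random


def task_succeeds(difficulty, eased_steps=0, roll=None):
    """Resolve a Numenera-style task (simplified sketch of the core rule).

    difficulty:  0-10, set by the GM.
    eased_steps: total steps removed by skills, assets, or Effort.
    roll:        a d20 result; rolled at random when not supplied.
    """
    final = max(0, difficulty - eased_steps)
    if final == 0:
        return True  # a routine task succeeds without a roll
    target = final * 3  # target number is three times the difficulty
    if roll is None:
        roll = random.randint(1, 20)
    return roll >= target


print(task_succeeds(4, roll=12))                 # True: 12 meets target 12
print(task_succeeds(5, eased_steps=1, roll=11))  # False: target is still 12
```

Note that difficulty 7 and up (target 21+) can’t be beaten on a d20 without easing it first, which is why Effort and creative use of assets matter so much in a fight.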

Because of this, combat is completely optional in Numenera. Don’t force the players into combat if they find a way to avoid it. Make them feel great about their cunning victory! We’ve had great and satisfying sessions without a single thrown punch.

That being said, it does not mean Numenera combat can’t be fun. One of the most engaging sessions we had consisted of a single battle – when a monster hunter delve decided to hunt a morigo – an enormous beast whose entire purpose was to scare the players from entering an area. The fight was deadly and arduous, but the characters triumphed against all odds.

You still need to know all the rules

The latest edition of Numenera consists of two books, titled Discovery and Destiny, both targeted at the game master. The two tomes combined clock in at a whopping 800 pages of reading material. However, only 29 of the lavishly illustrated pages outline the rules. The rest provide supplemental material: character customization options, the setting, realizing the world, building a compelling narrative, and so on.

Because of this, it’s even more important for the GM to be intimately familiar with the rules. Since there was so little to learn, the group of players I’m GMing for decided that it’s not worth reading the rules. Thankfully Numenera rules are easy to teach, but you still need to know them.

A lesson in assertiveness

When playing D&D, I’d often outsource dealing with the rules by designating a rules arbiter from the player ranks. This doesn’t seem to work in Numenera. There, rules are guidelines, and logic dictates how each situation is resolved. The GM has the fullest picture of the situation in their head, making it crucial for them to adjudicate.

It certainly took me time to get used to putting my foot down, even if the players might not always agree with the decision. I found myself frequently making snap judgements, and following up with any adjustments after the session – rpg.stackexchange.com turned out to be a great resource for asking questions about the spirit of the rules.

Don’t dungeon crawl

“And then you enter another room which contains X, Y, and Z”. That’s the line I kept repeating during our weakest session to date. Numenera does not lend itself well to a typical dungeon crawl.

Monte Cook Games published a few sets of fantastic adventures, Weird Discoveries and Explorer’s Keys, which require little to no prep to run. But it doesn’t help that these come with standard dungeon-style maps.

Turned out a more engaging way to describe locations is: “Over the next few hours you explore the obelisk, and within the maze of the corridors you note a number of places of interest. There are…”. The rulebook has my back on this too.

Bring out the weird

The most difficult part of running Numenera games was highlighting how weird the Ninth World truly is. My players kept falling back into thinking of Steadfast as a standard medieval fantasy setting, and that’s in many ways because I kept forgetting to tell them how weird everything is.

How a mountain in the background is a machine from the prior world. How the water in the swamp was a conduit for some unimaginable device. And how none of it should make sense.

In earlier games, I had explanations ready for everything odd occurring in the world. I’ve been slowly making an effort to make the world more mysterious, more unexplainable, and more weird. This seems to be the key to running a successful Numenera game, and I’m still trying to find the balance between consistency and weirdness in the world the characters live in.